We had a guest lecture from Sid Sen on computational complexity and algorithm analysis.

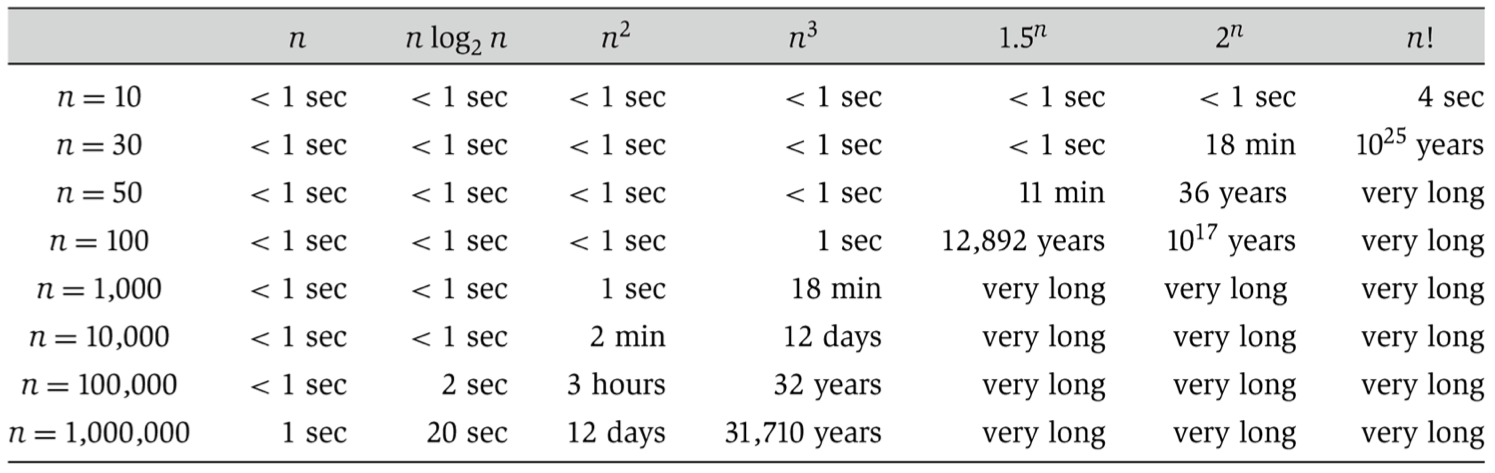

Sid discussed various ways of analyzing how long algorithms take to run, focusing on worst-case analysis. We discussed asymptotic notation (big-O for upper bounds, big-omega for lower bounds, and big-theta for tight bounds). The table above, from Algorithm Design by Kleinberg and Tardos, shows how long we should expect different algorithms to run on modern hardware. The key takeaway is that knowing how to match the right algorithm to your dataset is important. For instance, when you’re dealing with millions of observations, only linear (or maybe linearithmic) time algorithms are practical.

A few other references:

- A beginner’s guide to big-O notation

- Another introduction to big-O

- The big-O cheatsheet

Sid finished his lecture by discussing how this applies to something as simple as taking the intersection of two lists, useful for joining different tables.

A naive approach of comparing all pairs of elements takes quadratic time.

It’s relatively easy to do much better by sorting and merging the two sets, reducing this to n log(n) time.

And if we’re willing to trade space for time, we can use a hash table to get the job done in linear time, known as a hash join.

We used the second half of lecture to discuss data manipulation in R, specifically focusing on using dplyr from the tidyverse for a convenient implementation of the split / apply / combine framework.

We started this lecture with a brief tour of using the RStudio IDE.

In particular, we focused on Hadley Wickham’s latest tool, dplyr (CRAN, GitHub), which provides a particularly nice implementation of the split/apply/combine paradigm.

Source code is available on the course GitHub page.

There are lots of R resources available on the web, but here are a few highlights:

- CodeSchool and DataCamp intro to R courses

- More about basic types (numeric, character, logical, factor) in R

- Vectors, lists, dataframes: a one page reference and [more details]

- Chapters 1, 2, and 5 of R for Data Science

- DataCamp’s Data Manipulation in R tutorial

- The dplyr vignette

- Sean Anderson’s dplyr and pipes examples (code on github)

- Rstudio’s data wrangling cheatsheet

- Hadley Wickham’s R style guide